FDA Deploys ‘Elsa’ AI to Tackle Flood of High-Tech Medical Devices

The U.S. Food and Drug Administration is reshaping how it regulates AI-enabled medical devices as the number of authorized products surpasses 1,000. This rising tide of technology is pressing the agency to modernize its approach to ensuring patient safety.

At the heart of the strategy is a draft guidance issued on January 6, 2025, which champions a “total product lifecycle” approach. It recommends that manufacturers account for a device’s performance from initial design through post-market updates, a significant shift from traditional, more static medical device oversight.

To streamline its own internal processes, the FDA also recently launched ‘Elsa,’ a generative AI tool for its staff. Housed in a secure cloud environment, the tool is designed to accelerate reviews and analysis. One official cited an anecdotal case where a task that once took days was cut to just six minutes.

However, this rapid internal adoption has been met with some skepticism from agency employees who question its immediate impact on complex product reviews, especially amid recent staffing cuts.

Managing a Moving Target

A core challenge for regulators is that AI systems do not stay fixed. Models may be retrained as new data arrives, and their performance can degrade when the data they encounter in practice diverges from the data they were trained on, a phenomenon often called “algorithmic drift.”

To address this, the FDA has finalized guidance on “Predetermined Change Control Plans” (PCCPs). These plans let manufacturers obtain authorization in advance for specific, planned modifications to their AI models, so that changes covered by the plan do not each require a new marketing submission.

This approach acknowledges the dynamic nature of AI. Still, it places a greater onus on companies to rigorously test and monitor their products throughout their time on the market, moving toward a model of continuous oversight rather than a single, point-in-time approval.
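The kind of continuous monitoring described above can be illustrated with a short Python sketch. It is a hypothetical example, not an FDA-prescribed method: it compares a model’s accuracy on a recent window of post-market cases with confirmed outcomes against the accuracy measured at authorization, and flags drift when the drop exceeds a chosen tolerance. The names `check_for_drift` and `baseline_accuracy`, and the default tolerance of 0.05, are assumptions for illustration; a real monitoring plan would typically use larger samples and formal statistical tests.

```python
from dataclasses import dataclass


@dataclass
class DriftReport:
    window_accuracy: float    # accuracy on the recent post-market window
    baseline_accuracy: float  # accuracy measured at authorization (assumed known)
    drifted: bool             # True if the drop exceeds the tolerance


def accuracy(predictions, labels):
    """Fraction of predictions that match the confirmed outcomes."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def check_for_drift(predictions, labels, baseline_accuracy, tolerance=0.05):
    """Hypothetical rule: flag drift when recent accuracy falls more than
    `tolerance` below the baseline accuracy."""
    window_acc = accuracy(predictions, labels)
    drifted = window_acc < baseline_accuracy - tolerance
    return DriftReport(window_acc, baseline_accuracy, drifted)


if __name__ == "__main__":
    # Ten recent post-market cases with confirmed outcomes (made-up data).
    preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
    truth = [1, 0, 1, 0, 0, 1, 1, 0, 1, 1]
    print(check_for_drift(preds, truth, baseline_accuracy=0.92))
```

Here 8 of 10 recent cases are correct, so the window accuracy of 0.8 falls below the 0.87 threshold and the report is flagged. A simple threshold keeps the idea visible; it trades statistical rigor for readability.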

Balancing Innovation and Equity

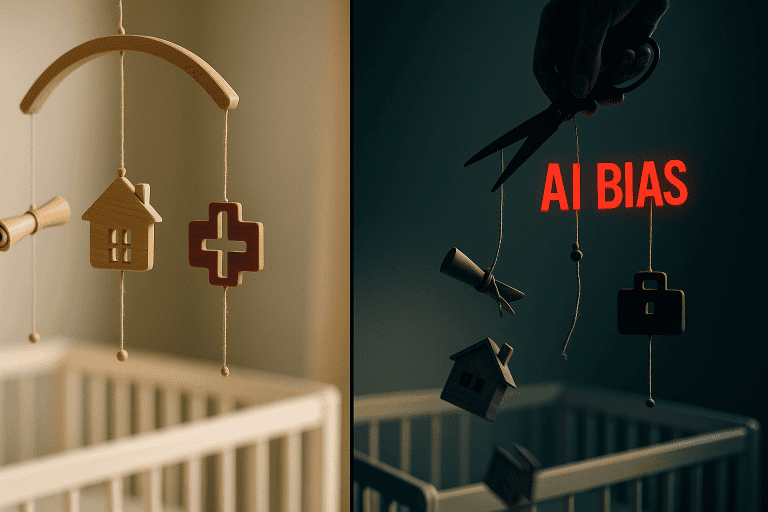

While the potential of AI in medicine is immense, so are the risks. A primary concern is algorithmic bias, where AI systems perpetuate or even amplify existing health disparities.

If training data underrepresents certain demographic groups, an AI tool may be less accurate for those populations. The problem is well documented in medical devices more broadly: pulse oximeters, for example, have been shown to be less accurate in patients with darker skin tones. The FDA’s draft lifecycle guidance recommends that manufacturers use diverse, representative data and evaluate device performance across demographic subgroups.
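Subgroup evaluation of the kind described above can be sketched in a few lines of Python. This is an illustrative example under stated assumptions, not the FDA’s method: it computes sensitivity (the share of true positive cases the device catches) separately for each demographic group and reports the largest gap between groups. The record format, the group names, and the choice of sensitivity as the metric are all hypothetical; a real evaluation would consider several metrics and their confidence intervals.

```python
from collections import defaultdict


def sensitivity_by_subgroup(records):
    """Return {group: sensitivity}, where sensitivity = TP / (TP + FN).

    Each record is a (group, true_label, predicted_label) tuple, with 1
    meaning the condition is present. Groups with no positive cases are
    omitted because sensitivity is undefined for them.
    """
    tp = defaultdict(int)  # true positives per group
    fn = defaultdict(int)  # false negatives (missed cases) per group
    for group, truth, pred in records:
        if truth == 1:
            if pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


def max_gap(metric_by_group):
    """Largest difference in the metric between any two groups (needs >= 1 group)."""
    values = metric_by_group.values()
    return max(values) - min(values)


if __name__ == "__main__":
    # Made-up evaluation records for two hypothetical groups.
    records = [
        ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1),
        ("group_a", 1, 0), ("group_a", 0, 0),
        ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
        ("group_b", 1, 1), ("group_b", 0, 1),
    ]
    sens = sensitivity_by_subgroup(records)
    print(sorted(sens.items()), max_gap(sens))
```

In this made-up data the device catches 75% of positive cases in one group but only 50% in the other; an aggregate accuracy figure alone would hide that gap.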

Data security is another critical focus. The agency’s guidance highlights AI-specific threats such as “data poisoning,” in which malicious actors corrupt training data to compromise a model’s integrity.

To build trust, the FDA is also pushing for greater transparency. Its recommendations encourage companies to produce clear, public-facing “model cards” that explain what an AI device does, how it performs, and what its limitations are.

An Agency Under Pressure

The sheer volume and novelty of AI submissions are straining the agency’s resources. As of March 2025, 1,016 AI/ML devices had been authorized, with a growing number cleared through the 510(k) pathway, which is designed for devices that are “substantially equivalent” to a product already on the market.

Even these incremental devices, however, introduce complexities that traditional review processes were not designed to handle.

More advanced systems, such as large language models (LLMs), present an even greater challenge. Their tendency to generate unpredictable outputs, including plausible but incorrect information known as “hallucinations,” raises fundamental questions about how to define and regulate them.

Some research has shown that LLMs can provide device-like clinical advice even when instructed not to, blurring the line between general-purpose software and a regulated medical device.

This evolving landscape suggests the FDA’s work is far from over, requiring sustained collaboration between the agency, industry, and healthcare providers to ensure that AI fulfills its promise to improve public health safely and equitably.

Key Takeaways

- The FDA is updating its regulatory approach as the number of authorized AI-enabled medical devices exceeds 1,000.

- New guidance focuses on the entire product lifecycle and lets manufacturers pre-authorize planned modifications to adaptive AI models.

- Major concerns being addressed include algorithmic bias that can exacerbate health disparities, cybersecurity threats, and the “black box” nature of complex AI systems.